When Google launched AI Overviews, the feature raised concerns that the growth of AI software would make online misinformation more prevalent. Language models have been generating public-facing content in Google products for months, and as a result, research institutions and Google Scholar now face a growing risk from AI-generated material. As more pressing studies emerged, the interdisciplinary team at Google Jigsaw began reporting on AI Overview misinformation trends for consumers.

What AI Software Contains

AI software combines statements generated by language models with live links to online sources, and it can cite those sources without knowing whether the information has been verified. These systems are designed to answer more complex and specific questions than a regular search can. Generative AI tools include ChatGPT (developed by OpenAI), Microsoft Copilot, Google Gemini (previously Bard), and Claude.

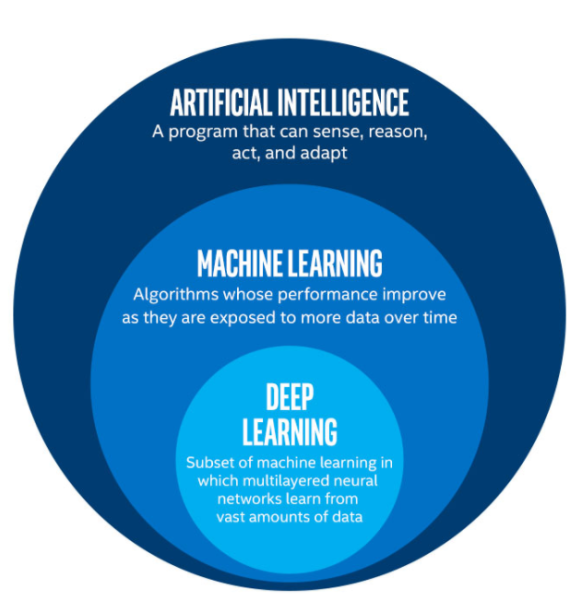

These AI tools often rely on machine learning, a branch of AI in which a system learns and improves from experience and sample data without being explicitly programmed for each task. In practice, this means the system uses algorithms and statistical models to draw inferences from data.

Core machine learning methods include supervised learning, unsupervised learning, and deep learning. Supervised learning trains on labeled data sets, where each example is paired with the desired output; the model learns to map new inputs to the correct output. Unsupervised learning trains on unlabeled data, where no output is specified in advance, so the model must find patterns or groupings on its own. Deep learning uses artificial neural networks with many layers, loosely modeled on the human brain, to solve problems.
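The difference between supervised and unsupervised learning can be illustrated with a minimal sketch. The toy numbers, the labels "short" and "long", and the function names below are all assumptions made for illustration; they do not describe how any of the products named above are trained.

```python
from statistics import mean

# Supervised learning: every training example comes with a label.
# (Toy values and labels, invented for illustration.)
labeled = [(1.0, "short"), (1.5, "short"), (8.0, "long"), (9.0, "long")]

def fit_centroids(examples):
    """Learn one average value (centroid) per label from labeled data."""
    groups = {}
    for value, label in examples:
        groups.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in groups.items()}

def predict(centroids, value):
    """Assign the label whose centroid is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Unsupervised learning: the same values, but with no labels attached.
unlabeled = [1.0, 1.5, 8.0, 9.0]

def two_means(values, steps=10):
    """Split values into two groups with a tiny 1-D k-means (k = 2).

    Assumes the input contains at least two distinct values.
    """
    low, high = min(values), max(values)
    for _ in range(steps):
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(near_low), mean(near_high)
    return sorted(near_low), sorted(near_high)

centroids = fit_centroids(labeled)
print(predict(centroids, 2.0))   # label predicted for a new, unseen value
print(two_means(unlabeled))      # two groups found without any labels
```

In the supervised half, the labels tell the model what the right answer looks like. In the unsupervised half, the model only discovers that the values fall into two groups; it has no way of knowing what those groups should be called.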

Google AI Overview

In 2023, Google introduced Bard, an experimental conversational AI powered by the LaMDA language model. Google then launched the Search Generative Experience (SGE) for select users in the United States, which offered results similar to today's AI Overviews. In 2024, Google rebranded Bard as Gemini, and following the change, it renamed SGE to AI Overviews.

AI Overviews are AI-generated summaries that appear in Google search results. Rather than requiring users to break a question into multiple searches, an AI Overview combines the relevant results into a single response with linked citations.

Misinformation Trends

However, the generative AI tools described above can produce or enable fabricated, manipulated, and harmful or unmonitored content, which affects both public information and publications indexed by Google Scholar.

There are two main categories of generative AI misuse: exploiting generative AI capabilities and compromising generative AI systems. Exploitation includes creating false but realistic depictions and impersonating real people, while compromises include removing AI safeguards and using adversarial inputs that cause a system to malfunction. Some misuse combines several of these tactics, and Google labels such combinations as strategies. The misuses identified include opinion manipulation, child exploitation, extremism, harassment, and forgery or scams, as well as practices such as digital resurrection, resource development, and privacy-violating undressing services. Google Jigsaw has conducted research with misinformation creators and now helps people protect themselves from online manipulation.

Concerns have also risen about AI-generated text appearing in scholarly articles, including supposedly peer-reviewed ones, that are indexed by Google Scholar. In a study published through Harvard, scientific papers found on Google Scholar that showed signs of text generated by OpenAI's ChatGPT were retrieved, downloaded, and analyzed using qualitative coding. Most of the papers that were allegedly written with AI contained common phrases returned by conversational agents that use large language models (LLMs), such as ChatGPT. Google Search was then used to determine how widely copies of these questionable, ChatGPT-fabricated papers were available in various archives, citation databases, and social media platforms. The findings raise concerns that fabricated “studies” could overwhelm the scholarly communication system. They also point to an increased possibility that convincing, yet false, scientific content will be publicly available in academic search engines, particularly Google Scholar.
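The idea of spotting papers by the stock phrases a conversational agent leaves behind can be sketched as a simple text search. This is only an illustration under stated assumptions: the two phrases in `TELLTALE_PHRASES` are hypothetical examples, and the function names are invented here; the phrase list and procedure used in the actual study may differ.

```python
import re

# Hypothetical examples of boilerplate phrases a chatbot might leave behind;
# the phrase list used in a real study may differ.
TELLTALE_PHRASES = [
    "as of my last knowledge update",
    "i don't have access to real-time data",
]

def normalize(text):
    """Lowercase, unify curly apostrophes, and collapse whitespace."""
    text = text.lower().replace("\u2019", "'")
    return re.sub(r"\s+", " ", text)

def find_telltale_phrases(text, phrases=TELLTALE_PHRASES):
    """Return the phrases from `phrases` that occur in `text`."""
    cleaned = normalize(text)
    return [p for p in phrases if p in cleaned]

# Invented sample sentence with a line break inside the phrase.
sample = "As of my last knowledge\nupdate, no such trials had been published."
print(find_telltale_phrases(sample))
```

A match is a reason to look more closely at a paper, not proof that it was fabricated: a legitimate article about chatbots could quote the same phrases.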

Teams across Google are working with Jigsaw and other research units to develop better safeguards for generative AI technologies. The joint initiative has gathered and analyzed nearly 200 media reports capturing public incidents of misuse. From these reports, Google has defined and categorized common tactics for misusing generative AI and has found novel patterns in how these technologies are being exploited or compromised.